Bug log from the setup process

# bug1 (an old k8s installation was still present on the machine)

After writing the config as the document describes, I initialized the cluster with `kubeadm init --config kubeadm.yaml` and got the following output:

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all running Kubernetes containers by using crictl:

- 'crictl --runtime-endpoint unix:///run/containerd/containerd.sock ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'crictl --runtime-endpoint unix:///run/containerd/containerd.sock logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher


From the log output, focus on the key lines first:

The kubelet is not running

The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

Both lines suggest that the kubelet never started successfully, so let's check its status:

systemctl status kubelet

root@openstackTest-1:~# systemctl status kubelet.service

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabl>

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since Sat 2023-09-02 09:43:0>

Docs: https://kubernetes.io/docs/home/

Process: 206088 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG>

Main PID: 206088 (code=exited, status=1/FAILURE)


The kubelet service is stuck in an auto-restart loop. How do we get more detailed logs?

journalctl -xeu kubelet

That command leads us to the following log entries:

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: E0902 09:46:58.025261 207581 cri_stats_provider.go:448] "Failed to get the info of the filesystem with mountpoint" err="unable to >

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: E0902 09:46:58.025389 207581 kubelet.go:1431] "Image garbage collection failed once. Stats initialization may not have completed y>

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: I0902 09:46:58.025703 207581 server.go:462] "Adding debug handlers to kubelet server"

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: I0902 09:46:58.026158 207581 fs_resource_analyzer.go:67] "Starting FS ResourceAnalyzer"

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: I0902 09:46:58.026302 207581 volume_manager.go:291] "Starting Kubelet Volume Manager"

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: E0902 09:46:58.026450 207581 kubelet_node_status.go:458] "Error getting the current node from lister" err="node \"openstacktest-1\>

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: I0902 09:46:58.026545 207581 desired_state_of_world_populator.go:151] "Desired state populator starts to run"

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: I0902 09:46:58.026668 207581 reconciler_new.go:29] "Reconciler: start to sync state"

Sep 02 09:46:58 openstackTest-1 kubelet[207581]: E0902 09:46:58.027099 207581 server.go:179] "Failed to listen and serve" err="listen tcp 0.0.0.0:10250: bind: address already in u>

Sep 02 09:46:58 openstackTest-1 systemd[1]: kubelet.service: Main process exited, code=exited, status=1/FAILURE
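The journal output mixes informational and error entries. As a minimal sketch (the function name `kubelet_errors` is made up for illustration), the first letter of the kubelet's klog-style prefix is the severity (I, W, E, F), so it can be used to keep only the error and fatal lines:

```shell
#!/usr/bin/env bash
# kubelet_errors: read journal lines on stdin and print only the kubelet
# entries whose klog severity is E (error) or F (fatal).
# Assumption: lines look like "... kubelet[PID]: E0902 09:46:58.027099 ...".
kubelet_errors() {
  grep -E 'kubelet\[[0-9]+\]: [EF][0-9]{4} '
}

# Typical use on a live node (not run here):
#   journalctl -u kubelet --no-pager | kubelet_errors
```

Applied to the excerpt above, this would leave the "Failed to listen and serve" line among the few remaining entries, which is the one that matters here.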


The log shows that port 10250 is already in use. Let's see what is holding it:

lsof -i:10250

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

kubelet 1095884 root 18u IPv6 81413558 0t0 TCP *:10250 (LISTEN)

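Surprisingly, the port is held by a kubelet process (PID 1095884, not one of the PIDs that systemd keeps restarting).

After I killed that process, a new one came back a few seconds later and took the port again, so I removed kubelet, kubectl, and kubeadm with apt: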

apt remove kubeadm kubectl kubelet

After the removal, I checked the port again with `lsof -i:10250`:

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

kubelet 1092384 root 18u IPv6 8144232 0t0 TCP *:10250 (LISTEN)

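The port is still in use. That means another kubelet exists on the system: the old kubelet keeps holding the port, so the new one cannot start. The first goal is therefore to find the old kubelet and remove it.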

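Method 1:

- Run `which kubelet` to find its path, for example /path/to/your/kubelet.
- Delete the kubelet binary: `sudo rm /path/to/your/kubelet`
- Confirm the deletion: run `which kubelet` again. It should print nothing, meaning kubelet is no longer on the PATH.

Method 2:

- Run `snap list` and check the list.
- If kubelet is listed, uninstall it with `snap remove kubelet`.
- Check the port again: `lsof -i :10250`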

With the methods above, the old version of kubelet and its related components were removed.
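Plain `which` stops at the first match, so a second copy further down the PATH can go unnoticed. The following is a small sketch, with a hypothetical helper name `find_on_path`, that lists every executable of a given name on the PATH:

```shell
#!/usr/bin/env bash
# find_on_path NAME: print every executable called NAME found in $PATH,
# one per line, in PATH order. Unlike plain `which`, it does not stop at
# the first hit, so a second, forgotten copy also shows up.
find_on_path() {
  local name=$1 dir
  local IFS=:
  for dir in $PATH; do
    [ -n "$dir" ] || continue          # skip empty PATH entries
    if [ -f "$dir/$name" ] && [ -x "$dir/$name" ]; then
      printf '%s\n' "$dir/$name"
    fi
  done
}

# Typical use (not run here):
#   find_on_path kubelet
```

For a process that is already running, `readlink /proc/<PID>/exe` on Linux shows which binary it was started from, which is another way to trace the process that `lsof` reported back to its installation.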

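bug 1: k8s components had been installed on this system before, so while following this tutorial the old components held the ports and the new ones could not be installed cleanly.

Fix: find out which old component holds the port that needs to be allocated, then remove that component.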

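# bug2 (caused by being unable to listen on the public IP)

After fixing the problem above, we rerun the k8s cluster initialization with the following commands: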

kubeadm reset

kubeadm init --config kubeadm.yaml

The same error still appears:

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all running Kubernetes containers by using crictl:

- 'crictl --runtime-endpoint unix:///run/containerd/containerd.sock ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'crictl --runtime-endpoint unix:///run/containerd/containerd.sock logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher


Let's look at the kubelet logs again:

journalctl -xeu kubelet

Sep 02 12:23:04 openstackTest-1 kubelet[242796]: I0902 12:23:04.557335 242796 kubelet_node_status.go:70] "Attempting to register node" node="openstacktest-1"

Sep 02 12:23:04 openstackTest-1 kubelet[242796]: E0902 12:23:04.558539 242796 kubelet_node_status.go:92] "Unable to register node with API server" err="Post \"https://122.228.207.18:6443/api/v1/nodes\": dial tcp 122.228.207.18:6443: connect: connection refused" node="openstacktest-1"

Sep 02 12:23:05 openstackTest-1 kubelet[242796]: I0902 12:23:05.521325 242796 scope.go:117] "RemoveContainer" containerID="1eb15347bbb843aeb0b42368a673afa843da38c3ff382a6a7e1740c765433f8c"

Sep 02 12:23:05 openstackTest-1 kubelet[242796]: E0902 12:23:05.553499 242796 controller.go:146] "Failed to ensure lease exists, will retry" err="Get \"https://122.228.207.18:6443/apis/coordination.k8s.io/v1/namespaces/kube-node-lease/leases/openstacktest-1?timeout=10s\": dial tcp 122.228.207.18:6443: connect: connection refused" interval="7s"

Sep 02 12:23:06 openstackTest-1 kubelet[242796]: E0902 12:23:06.628606 242796 eviction_manager.go:258] "Eviction manager: failed to get summary stats" err="failed to get node info: node \"openstacktest-1\" not found"

Sep 02 12:23:10 openstackTest-1 kubelet[242796]: I0902 12:23:10.521039 242796 scope.go:117] "RemoveContainer" containerID="0021271dbdddfa281641339a0b8537310e3fabd497ef82ecac15c6643dd86ad5"

Sep 02 12:23:10 openstackTest-1 kubelet[242796]: E0902 12:23:10.521952 242796 pod_workers.go:1300] "Error syncing pod, skipping" err="failed to \"StartContainer\" for \"etcd\" with CrashLoopBackOff: \"back-off 1m20s restarting failed container=etcd pod=etcd-openstacktest-1_kube-system(6de64f3ef971e99f29e7ad6866a8fb9c)\"" pod="kube-system/etcd-openstacktest-1" podUID="6de64f3ef971e99f29e7ad6866a8fb9c"

Sep 02 12:23:11 openstackTest-1 kubelet[242796]: I0902 12:23:11.561894 242796 kubelet_node_status.go:70] "Attempting to register node" node="openstacktest-1"

Sep 02 12:23:16 openstackTest-1 kubelet[242796]: E0902 12:23:16.629284 242796 eviction_manager.go:258] "Eviction manager: failed to get summary stats" err="failed to get node info: node \"openstacktest-1\" not found"

Sep 02 12:23:17 openstackTest-1 kubelet[242796]: E0902 12:23:17.278095 242796 event.go:289] Unable to write event: '&v1.Event{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"openstacktest-1.1780fa204b118de6", GenerateName:"", Namespace:"default", SelfLink:"", UID:"", ResourceVersion:"", Generation:0, CreationTimestamp:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), DeletionTimestamp:<nil>, DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string(nil), OwnerReferences:[]v1.OwnerReference(nil), Finalizers:[]string(nil), ManagedFields:[]v1.ManagedFieldsEntry(nil)}, InvolvedObject:v1.ObjectReference{Kind:"Node", Namespace:"", Name:"openstacktest-1", UID:"openstacktest-1", APIVersion:"", ResourceVersion:"", FieldPath:""}, Reason:"Starting", Message:"Starting kubelet.", Source:v1.EventSource{Component:"kubelet", Host:"openstacktest-1"}, FirstTimestamp:time.Date(2023, time.September, 2, 12, 21, 16, 496645606, time.Local), LastTimestamp:time.Date(2023, time.September, 2, 12, 21, 16, 496645606, time.Local), Count:1, Type:"Normal", EventTime:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), Series:(*v1.EventSeries)(nil), Action:"", Related:(*v1.ObjectReference)(nil), ReportingController:"kubelet", ReportingInstance:"openstacktest-1"}': 'Post "https://122.228.207.18:6443/api/v1/namespaces/default/events": net/http: TLS handshake timeout'(may retry after sleeping)

Sep 02 12:23:21 openstackTest-1 kubelet[242796]: E0902 12:23:21.563517 242796 kubelet_node_status.go:92] "Unable to register node with API server" err="Post \"https://122.228.207.18:6443/api/v1/nodes\": net/http: TLS handshake timeout" node="openstacktest-1"

Sep 02 12:23:22 openstackTest-1 kubelet[242796]: E0902 12:23:22.555826 242796 controller.go:146] "Failed to ensure lease exists, will retry" err="Get \"https://122.228.207.18:6443/apis/coordination.k8s.io/v1/namespaces/kube-node-lease/leases/openstacktest-1?timeout=10s\": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)" interval="7s"

Sep 02 12:23:23 openstackTest-1 kubelet[242796]: I0902 12:23:23.520280 242796 scope.go:117] "RemoveContainer" containerID="0021271dbdddfa281641339a0b8537310e3fabd497ef82ecac15c6643dd86ad5"

Sep 02 12:23:23 openstackTest-1 kubelet[242796]: E0902 12:23:23.520743 242796 pod_workers.go:1300] "Error syncing pod, skipping" err="failed to \"StartContainer\" for \"etcd\" with CrashLoopBackOff: \"back-off 1m20s restarting failed container=etcd pod=etcd-openstacktest-1_kube-system(6de64f3ef971e99f29e7ad6866a8fb9c)\"" pod="kube-system/etcd-openstacktest-1" podUID="6de64f3ef971e99f29e7ad6866a8fb9c"

Sep 02 12:23:26 openstackTest-1 kubelet[242796]: E0902 12:23:26.432253 242796 certificate_manager.go:562] kubernetes.io/kube-apiserver-client-kubelet: Failed while requesting a signed certificate from the control plane: cannot create certificate signing request: Post "https://122.228.207.18:6443/apis/certificates.k8s.io/v1/certificatesigningrequests": read tcp 10.10.10.10:47642->122.228.207.18:6443: read: connection reset by peer

Sep 02 12:23:26 openstackTest-1 kubelet[242796]: E0902 12:23:26.629867 242796 eviction_manager.go:258] "Eviction manager: failed to get summary stats" err="failed to get node info: node \"openstacktest-1\" not found"

Sep 02 12:23:26 openstackTest-1 kubelet[242796]: I0902 12:23:26.957506 242796 scope.go:117] "RemoveContainer" containerID="1eb15347bbb843aeb0b42368a673afa843da38c3ff382a6a7e1740c765433f8c"

Sep 02 12:23:26 openstackTest-1 kubelet[242796]: I0902 12:23:26.960879 242796 scope.go:117] "RemoveContainer" containerID="b9b93654494444eb242e8fc4e4d2c270b7791705987801a10b4345dac46c25c8"

Sep 02 12:23:26 openstackTest-1 kubelet[242796]: E0902 12:23:26.962111 242796 pod_workers.go:1300] "Error syncing pod, skipping" err="failed to \"StartContainer\" for \"kube-apiserver\" with CrashLoopBackOff: \"back-off 40s restarting failed container=kube-apiserver pod=kube-apiserver-openstacktest-1_kube-system(8149da62ac5e89abb9a86a75fd1b8ca5)\"" pod="kube-system/kube-apiserver-openstacktest-1" podUID="8149da62ac5e89abb9a86a75fd1b8ca5"

Sep 02 12:23:27 openstackTest-1 kubelet[242796]: E0902 12:23:27.280012 242796 event.go:289] Unable to write event: '&v1.Event{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"openstacktest-1.1780fa204b118de6", GenerateName:"", Namespace:"default", SelfLink:"", UID:"", ResourceVersion:"", Generation:0, CreationTimestamp:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), DeletionTimestamp:<nil>, DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string(nil), OwnerReferences:[]v1.OwnerReference(nil), Finalizers:[]string(nil), ManagedFields:[]v1.ManagedFieldsEntry(nil)}, InvolvedObject:v1.ObjectReference{Kind:"Node", Namespace:"", Name:"openstacktest-1", UID:"openstacktest-1", APIVersion:"", ResourceVersion:"", FieldPath:""}, Reason:"Starting", Message:"Starting kubelet.", Source:v1.EventSource{Component:"kubelet", Host:"openstacktest-1"}, FirstTimestamp:time.Date(2023, time.September, 2, 12, 21, 16, 496645606, time.Local), LastTimestamp:time.Date(2023, time.September, 2, 12, 21, 16, 496645606, time.Local), Count:1, Type:"Normal", EventTime:time.Date(1, time.January, 1, 0, 0, 0, 0, time.UTC), Series:(*v1.EventSeries)(nil), Action:"", Related:(*v1.ObjectReference)(nil), ReportingController:"kubelet", ReportingInstance:"openstacktest-1"}': 'Post "https://122.228.207.18:6443/api/v1/namespaces/default/events": dial tcp 122.228.207.18:6443: connect: connection refused'(may retry after sleeping)

Sep 02 12:23:27 openstackTest-1 kubelet[242796]: W0902 12:23:27.435942 242796 reflector.go:535] vendor/k8s.io/client-go/informers/factory.go:150: failed to list *v1.Service: Get "https://122.228.207.18:6443/api/v1/services?limit=500&resourceVersion=0": dial tcp 122.228.207.18:6443: connect: connection refused - error from a previous attempt: read tcp 10.10.10.10:47696->122.228.207.18:6443: read: connection reset by peer

Sep 02 12:23:27 openstackTest-1 kubelet[242796]: E0902 12:23:27.436093 242796 reflector.go:147] vendor/k8s.io/client-go/informers/factory.go:150: Failed to watch *v1.Service: failed to list *v1.Service: Get "https://122.228.207.18:6443/api/v1/services?limit=500&resourceVersion=0": dial tcp 122.228.207.18:6443: connect: connection refused - error from a previous attempt: read tcp 10.10.10.10:47696->122.228.207.18:6443: read: connection reset by peer


Key line:

"Unable to register node with API server" err="Post \"https://122.228.207.18:6443/api/v1/nodes\": net/http: TLS handshake timeout" node="openstacktest-1"

………………

Everything points to port 6443 being unreachable. Could it be that the firewall or the corresponding security group has not been opened?

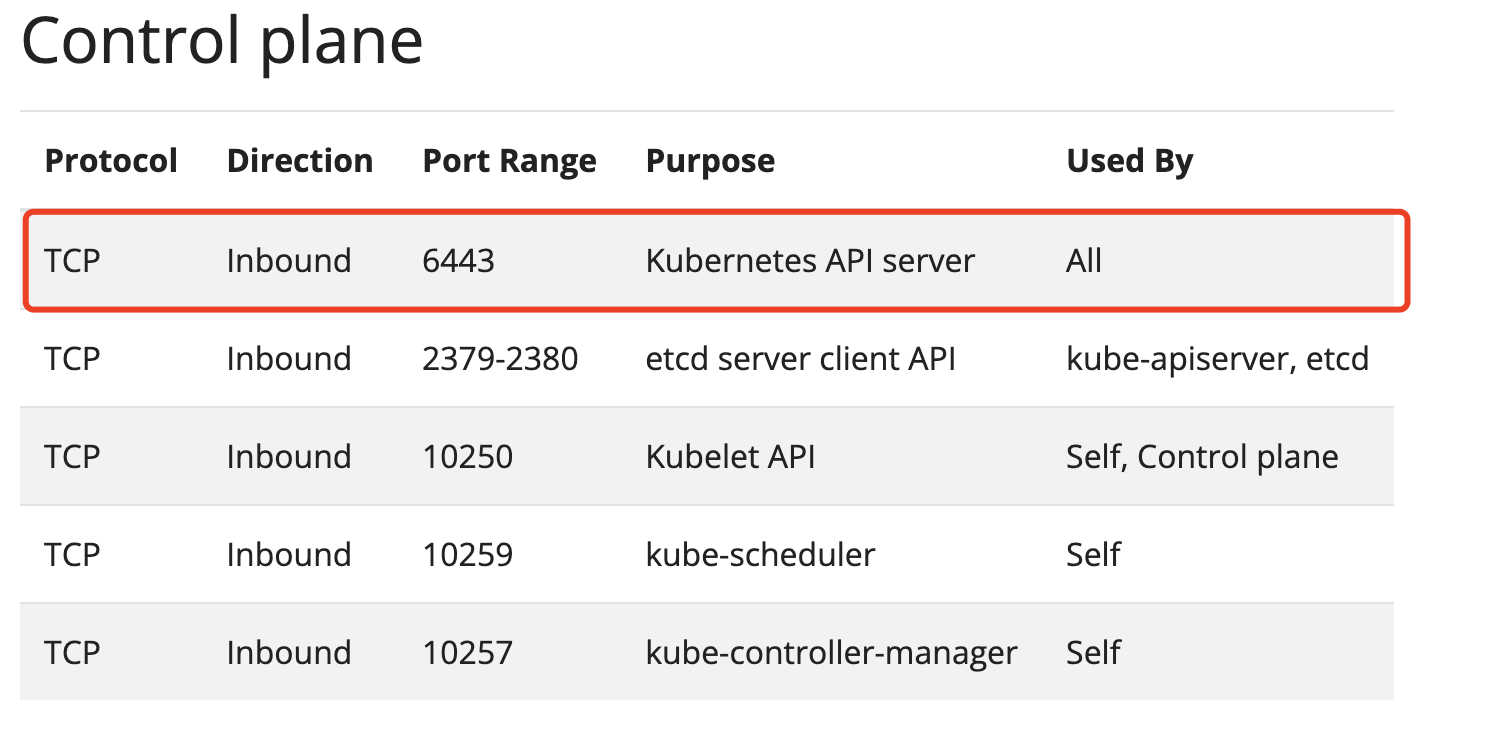

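The required ports have to be opened: on a physical machine, configure the firewall; on a cloud VM, open the security group in the provider's console. Which ports? See Ports and Protocols in the official documentation.

After opening them, the same error still appeared.

We need a way to verify whether this port is actually reachable. The nc command can do that. Restart the kubeadm initialization: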

kubeadm reset

kubeadm init --config kubeadm.yaml

Then, from another host, run `nc -vz 122.228.207.18 6443`:

zhengjian@zhengjiandeMacBook-Pro ~/Desktop> nc -vz 122.228.207.18 6443

Connection to 122.228.207.18 port 6443 [tcp/sun-sr-https] succeeded!

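This means the port is open and something is indeed listening on it. So why can't the calls in the logs get through to this port?

A few seconds later I tried the same nc command again: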

zhengjian@zhengjiandeMacBook-Pro ~/Desktop> nc -vz 122.228.207.18 6443

nc: connectx to 122.228.207.18 port 6443 (tcp) failed: Connection refused

Suddenly it no longer connects. Why?

The application listening on this port probably started and then failed again.
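Two manual probes a few seconds apart are easy to misread. As a rough sketch (the helper names `classify_probes` and `probe_port` are invented for this example), the same `nc` check can be repeated for a while and the results summarized as open, closed, or flapping:

```shell
#!/usr/bin/env bash
# classify_probes: given a sequence of probe results ("ok" or "fail") as
# arguments, print "open" if all succeeded, "flapping" if the results are
# mixed, and "closed" otherwise.
classify_probes() {
  local seen_ok=0 seen_fail=0 r
  for r in "$@"; do
    case $r in
      ok)   seen_ok=1 ;;
      fail) seen_fail=1 ;;
    esac
  done
  if [ "$seen_ok" = 1 ] && [ "$seen_fail" = 1 ]; then
    echo flapping
  elif [ "$seen_ok" = 1 ]; then
    echo open
  else
    echo closed
  fi
}

# probe_port HOST PORT COUNT: probe with `nc -z` COUNT times, about one
# second apart, and classify the outcome. -w 2 caps each attempt at 2 s.
probe_port() {
  local host=$1 port=$2 count=$3 results="" i=0
  while [ "$i" -lt "$count" ]; do
    if nc -z -w 2 "$host" "$port" >/dev/null 2>&1; then
      results="$results ok"
    else
      results="$results fail"
    fi
    i=$((i + 1))
    sleep 1
  done
  # Intentionally unquoted: split the space-separated results into words.
  classify_probes $results
}

# Typical use (not run here):
#   probe_port 122.228.207.18 6443 30
```

A "flapping" result is what one would expect from a listener that keeps starting and crashing, which is the hypothesis stated above.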

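So which application listens on this port?

Check the official documentation: Ports and Protocols. Its port table lists 6443 as the Kubernetes API server.

In k8s the apiserver is the core component; if the api-server cannot start, nothing else can work.

So the goal is now clear: find out why the api-server fails to start.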

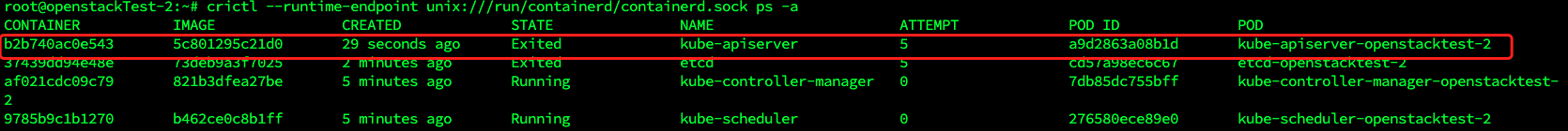

The api-server is launched by containerd, so we can inspect it with the matching CLI, crictl (Container Runtime Interface Command Line Tool).

### Check how the containers in containerd are running

crictl --runtime-endpoint unix:///run/containerd/containerd.sock ps -a

The api-server has indeed failed, so we need to find out why. Use the following command:

### View the startup log of a specific container

crictl --runtime-endpoint unix:///run/containerd/containerd.sock logs <CONTAINERID>
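Because a crashing container is recreated with a new ID on every restart, copying IDs by hand is easy to get wrong. The sketch below looks the ID up by container name first. It assumes the `--name`, `--latest`, and `-q` options of `crictl ps` behave as described in crictl's help text and that the socket path is the one used in this post. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Assumption: containerd's socket is at the path used in this post.
ENDPOINT=unix:///run/containerd/containerd.sock

# latest_container_id NAME: print the ID of the most recently created
# container whose name matches NAME, including exited ones.
latest_container_id() {
  crictl --runtime-endpoint "$ENDPOINT" ps -a --name "$1" --latest -q
}

# container_logs NAME: show the logs of that container, or fail with a
# message on stderr if no matching container exists.
container_logs() {
  local id
  id=$(latest_container_id "$1")
  if [ -z "$id" ]; then
    echo "no container found for $1" >&2
    return 1
  fi
  crictl --runtime-endpoint "$ENDPOINT" logs "$id"
}

# Typical use on the control-plane node (not run here):
#   container_logs kube-apiserver
#   container_logs etcd
```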

crictl --runtime-endpoint unix:///run/containerd/containerd.sock logs ca73661747c3f

The result:

root@openstackTest-2:~# crictl --runtime-endpoint unix:///run/containerd/containerd.sock logs ca73661747c3f

I0902 06:56:45.402383 1 options.go:220] external host was not specified, using 122.228.207.7

I0902 06:56:45.404381 1 server.go:148] Version: v1.28.1

I0902 06:56:45.404461 1 server.go:150] "Golang settings" GOGC="" GOMAXPROCS="" GOTRACEBACK=""

I0902 06:56:45.733856 1 shared_informer.go:311] Waiting for caches to sync for node_authorizer

I0902 06:56:45.749543 1 plugins.go:158] Loaded 12 mutating admission controller(s) successfully in the following order: NamespaceLifecycle,LimitRanger,ServiceAccount,NodeRestriction,TaintNodesByCondition,Priority,DefaultTolerationSeconds,DefaultStorageClass,StorageObjectInUseProtection,RuntimeClass,DefaultIngressClass,MutatingAdmissionWebhook.

I0902 06:56:45.749585 1 plugins.go:161] Loaded 13 validating admission controller(s) successfully in the following order: LimitRanger,ServiceAccount,PodSecurity,Priority,PersistentVolumeClaimResize,RuntimeClass,CertificateApproval,CertificateSigning,ClusterTrustBundleAttest,CertificateSubjectRestriction,ValidatingAdmissionPolicy,ValidatingAdmissionWebhook,ResourceQuota.

I0902 06:56:45.749995 1 instance.go:298] Using reconciler: lease

W0902 06:56:45.752333 1 logging.go:59] [core] [Channel #1 SubChannel #2] grpc: addrConn.createTransport failed to connect to {

"Addr": "127.0.0.1:2379",

"ServerName": "127.0.0.1",

"Attributes": null,

"BalancerAttributes": null,

"Type": 0,

"Metadata": null

}. Err: connection error: desc = "transport: Error while dialing: dial tcp 127.0.0.1:2379: connect: connection refused"

W0902 06:56:46.744804 1 logging.go:59] [core] [Channel #3 SubChannel #5] grpc: addrConn.createTransport failed to connect to {

"Addr": "127.0.0.1:2379",

"ServerName": "127.0.0.1",

"Attributes": null,

"BalancerAttributes": null,

"Type": 0,

"Metadata": null

}. Err: connection error: desc = "transport: Error while dialing: dial tcp 127.0.0.1:2379: connect: connection refused"

W0902 06:56:46.745307 1 logging.go:59] [core]

……………………………………

……………………………………

F0902 06:57:05.752085 1 instance.go:291] Error creating leases: error creating storage factory: context deadline exceeded


Key point:

W0902 06:56:45.752333 1 logging.go:59] [core] [Channel #1 SubChannel #2] grpc: addrConn.createTransport failed to connect to {

"Addr": "127.0.0.1:2379",

"ServerName": "127.0.0.1",

"Attributes": null,

"BalancerAttributes": null,

"Type": 0,

"Metadata": null

}. Err: connection error: desc = "transport: Error while dialing: dial tcp 127.0.0.1:2379: connect: connection refused"


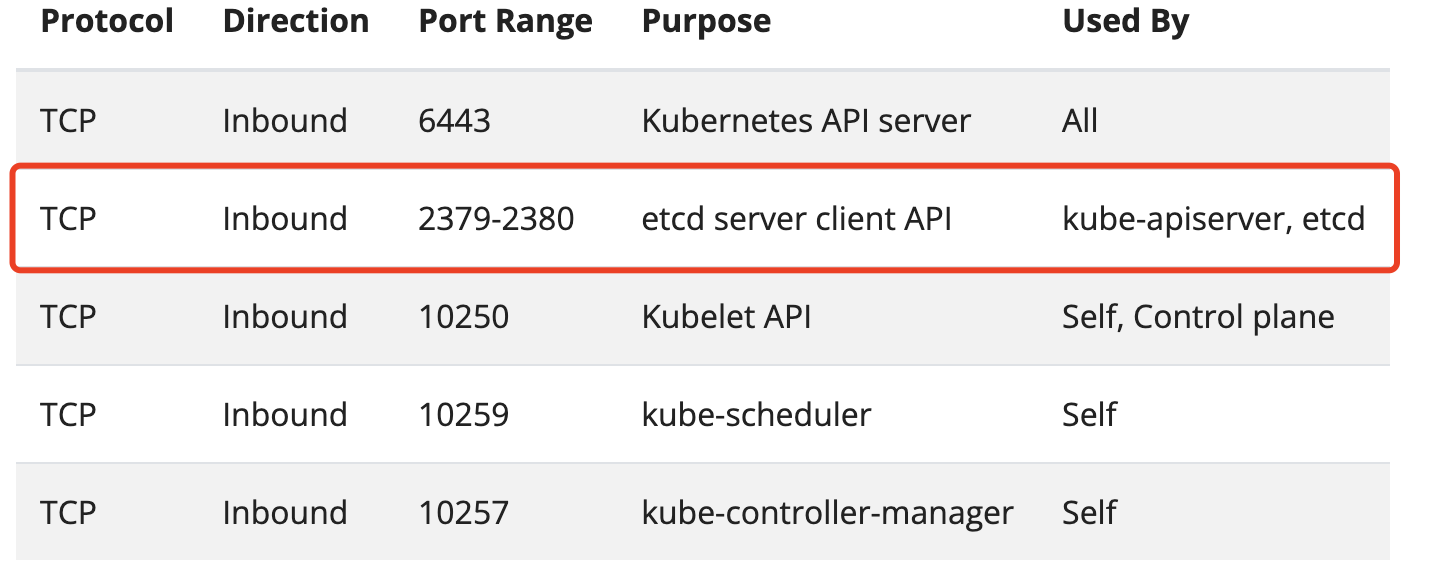

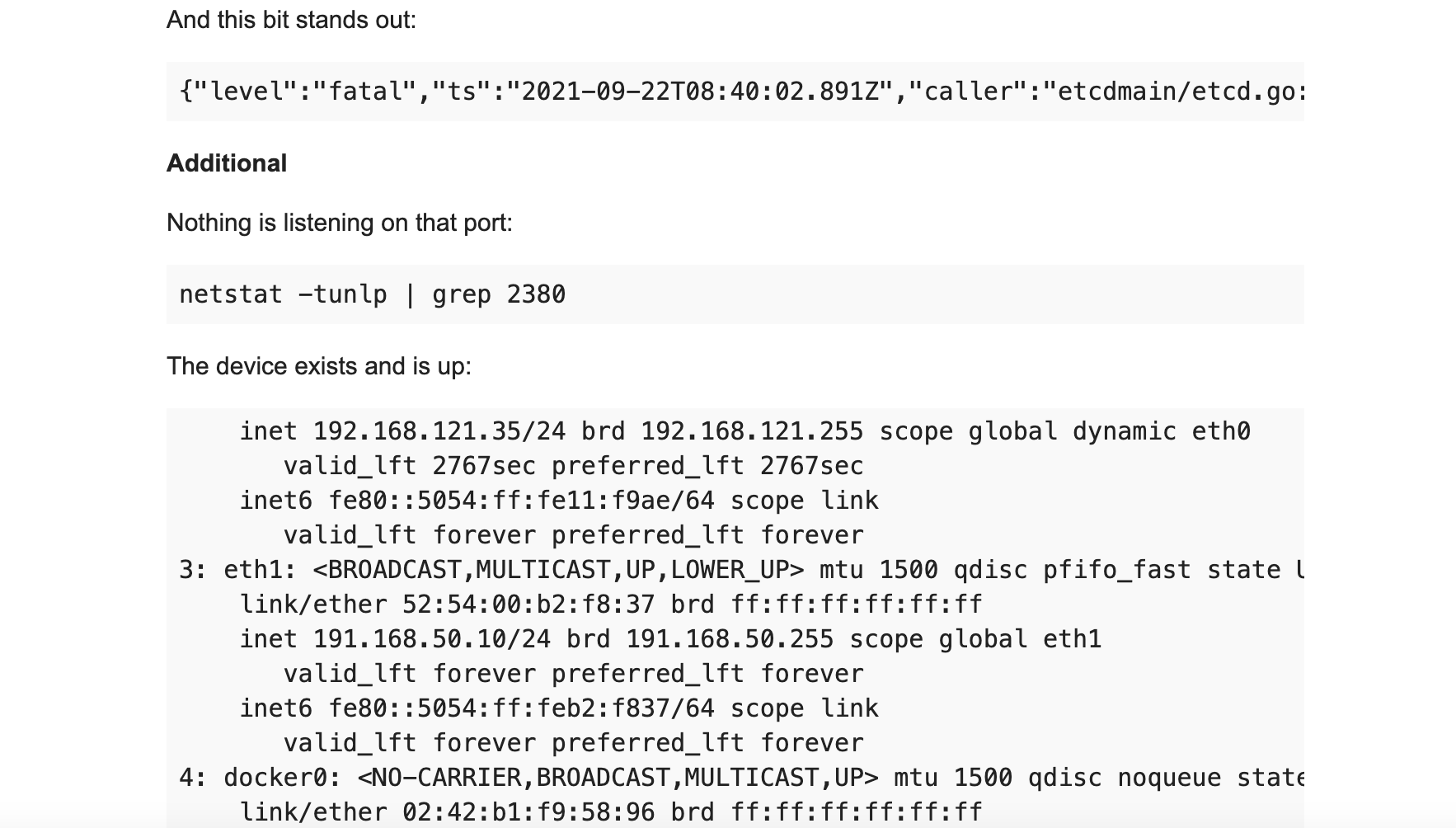

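So the service listening on port 2379 may not have started successfully. Which service is that? Check the documentation!

It is the etcd port, so etcd did not start successfully either: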

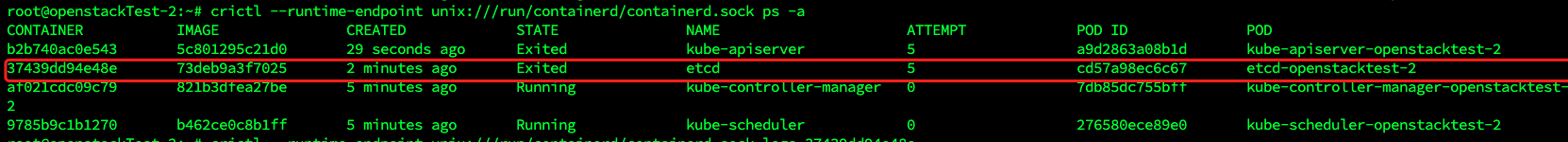
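Three default ports have come up so far: 10250, 6443, and 2379/2380. As a small illustration, with a hypothetical helper name `k8s_port_owner`, the control-plane rows of the Ports and Protocols table can be written down as a lookup:

```shell
#!/usr/bin/env bash
# k8s_port_owner PORT: print the control-plane component that listens on
# PORT by default, following the "Ports and Protocols" documentation page.
# Clusters configured with non-default ports will differ.
k8s_port_owner() {
  case $1 in
    6443)      echo "kube-apiserver" ;;
    2379|2380) echo "etcd" ;;
    10250)     echo "kubelet" ;;
    10259)     echo "kube-scheduler" ;;
    10257)     echo "kube-controller-manager" ;;
    *)         echo "unknown" ;;
  esac
}

# Typical use:
#   k8s_port_owner 2379
```

Reading the failure chain with this table in hand: the kubelet cannot reach 6443 because the api-server keeps crashing, and the api-server crashes because nothing answers on 2379, which belongs to etcd.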

Now let's look at the etcd logs:

crictl --runtime-endpoint unix:///run/containerd/containerd.sock logs 37439dd94e48e

root@openstackTest-2:~# crictl --runtime-endpoint unix:///run/containerd/containerd.sock logs 37439dd94e48e

{"level":"warn","ts":"2023-09-02T06:58:02.293956Z","caller":"embed/config.go:673","msg":"Running http and grpc server on single port. This is not recommended for production."}

{"level":"info","ts":"2023-09-02T06:58:02.294183Z","caller":"etcdmain/etcd.go:73","msg":"Running: ","args":["etcd","--advertise-client-urls=https://122.228.207.7:2379","--cert-file=/etc/kubernetes/pki/etcd/server.crt","--client-cert-auth=true","--data-dir=/var/lib/etcd","--experimental-initial-corrupt-check=true","--experimental-watch-progress-notify-interval=5s","--initial-advertise-peer-urls=https://122.228.207.7:2380","--initial-cluster=openstacktest-2=https://122.228.207.7:2380","--key-file=/etc/kubernetes/pki/etcd/server.key","--listen-client-urls=https://127.0.0.1:2379,https://122.228.207.7:2379","--listen-metrics-urls=http://127.0.0.1:2381","--listen-peer-urls=https://122.228.207.7:2380","--name=openstacktest-2","--peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt","--peer-client-cert-auth=true","--peer-key-file=/etc/kubernetes/pki/etcd/peer.key","--peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt","--snapshot-count=10000","--trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt"]}

{"level":"warn","ts":"2023-09-02T06:58:02.294331Z","caller":"embed/config.go:673","msg":"Running http and grpc server on single port. This is not recommended for production."}

{"level":"info","ts":"2023-09-02T06:58:02.294354Z","caller":"embed/etcd.go:127","msg":"configuring peer listeners","listen-peer-urls":["https://122.228.207.7:2380"]}

{"level":"info","ts":"2023-09-02T06:58:02.29444Z","caller":"embed/etcd.go:495","msg":"starting with peer TLS","tls-info":"cert = /etc/kubernetes/pki/etcd/peer.crt, key = /etc/kubernetes/pki/etcd/peer.key, client-cert=, client-key=, trusted-ca = /etc/kubernetes/pki/etcd/ca.crt, client-cert-auth = true, crl-file = ","cipher-suites":[]}

{"level":"info","ts":"2023-09-02T06:58:02.294684Z","caller":"embed/etcd.go:376","msg":"closing etcd server","name":"openstacktest-2","data-dir":"/var/lib/etcd","advertise-peer-urls":["https://122.228.207.7:2380"],"advertise-client-urls":["https://122.228.207.7:2379"]}

{"level":"info","ts":"2023-09-02T06:58:02.294705Z","caller":"embed/etcd.go:378","msg":"closed etcd server","name":"openstacktest-2","data-dir":"/var/lib/etcd","advertise-peer-urls":["https://122.228.207.7:2380"],"advertise-client-urls":["https://122.228.207.7:2379"]}

{"level":"warn","ts":"2023-09-02T06:58:02.294721Z","caller":"etcdmain/etcd.go:146","msg":"failed to start etcd","error":"listen tcp 122.228.207.7:2380: bind: cannot assign requested address"}

{"level":"fatal","ts":"2023-09-02T06:58:02.294752Z","caller":"etcdmain/etcd.go:204","msg":"discovery failed","error":"listen tcp 122.228.207.7:2380: bind: cannot assign requested address","stacktrace":"go.etcd.io/etcd/server/v3/etcdmain.startEtcdOrProxyV2\n\tgo.etcd.io/etcd/server/v3/etcdmain/etcd.go:204\ngo.etcd.io/etcd/server/v3/etcdmain.Main\n\tgo.etcd.io/etcd/server/v3/etcdmain/main.go:40\nmain.main\n\tgo.etcd.io/etcd/server/v3/main.go:31\nruntime.main\n\truntime/proc.go:250"}


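Key point:

"error":"listen tcp 122.228.207.7:2380: bind: cannot assign requested address"

This error says that etcd tried to listen on port 2380 of 122.228.207.7 but the requested address could not be assigned. (This log was captured on openstackTest-2, whose public IP is 122.228.207.7; on openstackTest-1 the corresponding address is 122.228.207.18.) It usually means the IP address given in the configuration does not match any of the node's actual addresses, or is not available.

That is odd: it cannot listen on the port. Is the port already in use?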

lsof -i:2380

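The port was not in use, so what was the cause? I started searching Google for an answer:

What does `ifconfig lo up` mean?

The ifconfig command configures and displays network interface information, and lo is the name of the local loopback interface. `ifconfig lo up` enables (activates) the loopback interface (lo). The loopback interface is a special network interface, normally used for communication inside the local host and for self-tests; it corresponds to the IP address 127.0.0.1, also known as localhost. Running `ifconfig lo up` enables the loopback interface so that processes on the host can communicate through 127.0.0.1. Many network applications and services depend on this, because they can reach themselves or other services on the same host through the loopback interface without going through a physical network adapter. Note that most modern Linux systems bring up the loopback interface automatically, so running `ifconfig lo up` by hand is usually unnecessary.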

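So in that case the 127.0.0.1 interface being down is what makes opening the port fail? Could it be that the interface for 122.228.207.18 is not up on my machine either?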

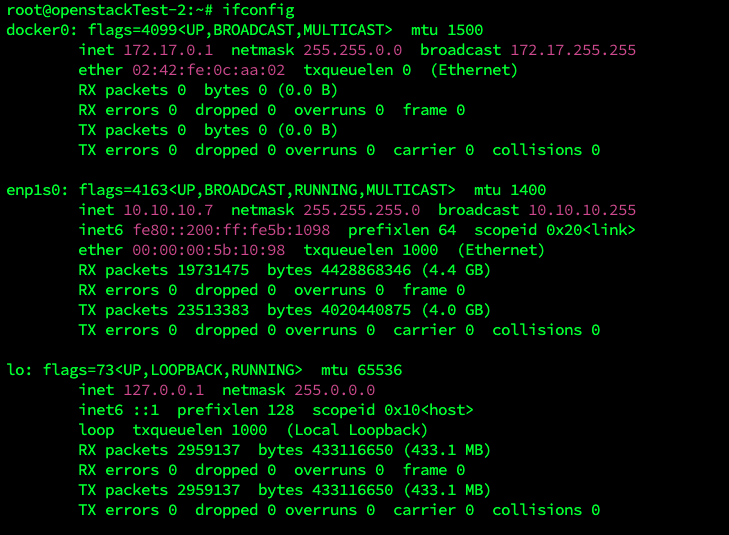

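The screenshot shows network-related information, which made me even more determined to check with ifconfig.

The result was a shock:

there was no entry for 122.228.207.18 at all, only the cloud server's private address.

I bought a cloud server from a cloud vendor, and it has a public IP and a private IP. But when I log in to the host over ssh and run ifconfig, the output only contains the private IP and no public IP. What is going on?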

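- ChatGPT:

If `ifconfig` on your cloud server shows only the private IP and no public IP, it may be because cloud servers do not necessarily show the public IP address in the `ifconfig` output by default. The public IP is usually associated with an external network interface, while `ifconfig` only shows the host's own network interfaces. You can try other commands to find the public IP, for example `curl ifconfig.me` or `curl ipinfo.io`; these send a request to an external server to obtain your public IP address. You can also inspect the network configuration files, usually /etc/network/interfaces or /etc/sysconfig/network-scripts/, which may contain information about the public IP. If you use a specific cloud provider, its management console or control panel usually lists the server's details, including the public IP address.

Note that the public IP is generally used for communicating with the internet, while the private IP is used for communicating with other servers or resources inside the same cloud provider. The private IP is therefore the one seen in intra-server and intra-cloud traffic, and the public IP is the one used for external traffic.

Put differently, the public IP is typically held by the provider's network and mapped (NAT) to the instance's private IP, so no interface inside the VM carries it and a process on the VM cannot bind to it. That matches the etcd error above.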
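Before putting an address into kubeadm.yaml, it can be checked against the addresses that are actually bound on the host. This is a minimal sketch that assumes the iproute2 `ip` command is available; the helper names `local_ipv4_addrs` and `ip_is_local` are made up for this example.

```shell
#!/usr/bin/env bash
# local_ipv4_addrs: print every IPv4 address bound to a local interface,
# one per line, by parsing `ip -o -4 addr show` (field 4 is ADDR/PREFIX).
local_ipv4_addrs() {
  ip -o -4 addr show | awk '{print $4}' | cut -d/ -f1
}

# ip_is_local ADDR: succeed only if ADDR is bound to a local interface.
# If it is not, a bind() to ADDR normally fails with
# "cannot assign requested address".
ip_is_local() {
  local_ipv4_addrs | grep -Fxq -- "$1"
}

# Typical use on the node (not run here):
#   ip_is_local 122.228.207.7 || echo "not a local address"
```

On a cloud VM like the one in this post, the check would be expected to fail for the public IP and succeed for the private one.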

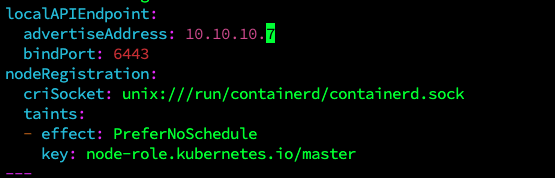

So I simply changed the address in kubeadm.yaml to this private address:
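The post does not show kubeadm.yaml itself. Assuming the address in question is the `advertiseAddress` key under `localAPIEndpoint` in kubeadm's InitConfiguration, the change could be scripted roughly like this (`set_advertise_address` is a hypothetical helper, and editing the file by hand works just as well):

```shell
#!/usr/bin/env bash
# set_advertise_address FILE IP: rewrite the value of every
# "advertiseAddress:" key in FILE to IP, keeping the indentation.
# Assumption: the address to change is InitConfiguration's
# localAPIEndpoint.advertiseAddress; the post does not show the file.
set_advertise_address() {
  local file=$1 ip=$2 tmp
  tmp=$(mktemp) || return 1
  sed -E "s/^([[:space:]]*advertiseAddress:[[:space:]]*).*/\1$ip/" "$file" > "$tmp" &&
    cat "$tmp" > "$file"   # overwrite in place, keeping FILE's permissions
  local rc=$?
  rm -f "$tmp"
  return $rc
}

# Typical use (not run here); 10.10.10.7 is the private IP of openstackTest-2:
#   set_advertise_address kubeadm.yaml 10.10.10.7
```

If the API server also has to be reachable through the public IP later, kubeadm's `apiServer.certSANs` list in ClusterConfiguration is where extra addresses for the serving certificate are declared. Whether that is needed depends on how the cluster will be accessed.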

kubeadm reset

kubeadm init --config kubeadm.yaml

root@openstackTest-2:~/k8s2# /usr/bin/kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.28.1

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local openstacktest-2] and IPs [10.96.0.1 10.10.10.7]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost openstacktest-2] and IPs [10.10.10.7 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost openstacktest-2] and IPs [10.10.10.7 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 9.005107 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node openstacktest-2 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node openstacktest-2 as control-plane by adding the taints [node-role.kubernetes.io/master:PreferNoSchedule]

[bootstrap-token] Using token: gkklvh.z9it9ichjnqukpw2

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.10.10.7:6443 --token gkklvh.z9it9ichjnqukpw2 \

--discovery-token-ca-cert-hash sha256:1b50f115c95b80f1f169d5addadb3fa36562e4e9b0fead1f592a77977e3832dc

root@openstackTest-2:~/k8s2#


Initialization succeeded.

https://www.gbase8.cn/12320